A well-developed assessment model requires considerable time and professional expertise. However, we believe it is a significant contribution to team and business development, and it is definitely worth the investment.

Railsware invests a significant part of the company’s income in the team and products. The growth of engineering expertise directly affects the success and profitability of the entire company, and the more income the company earns, the higher the level of compensation. Our own model of annual performance review is based on this correlation.

The Railsware approach was developed over seven years ago. As our business grows, processes improve, and new roles emerge, the assessment method is constantly updated.

The Process

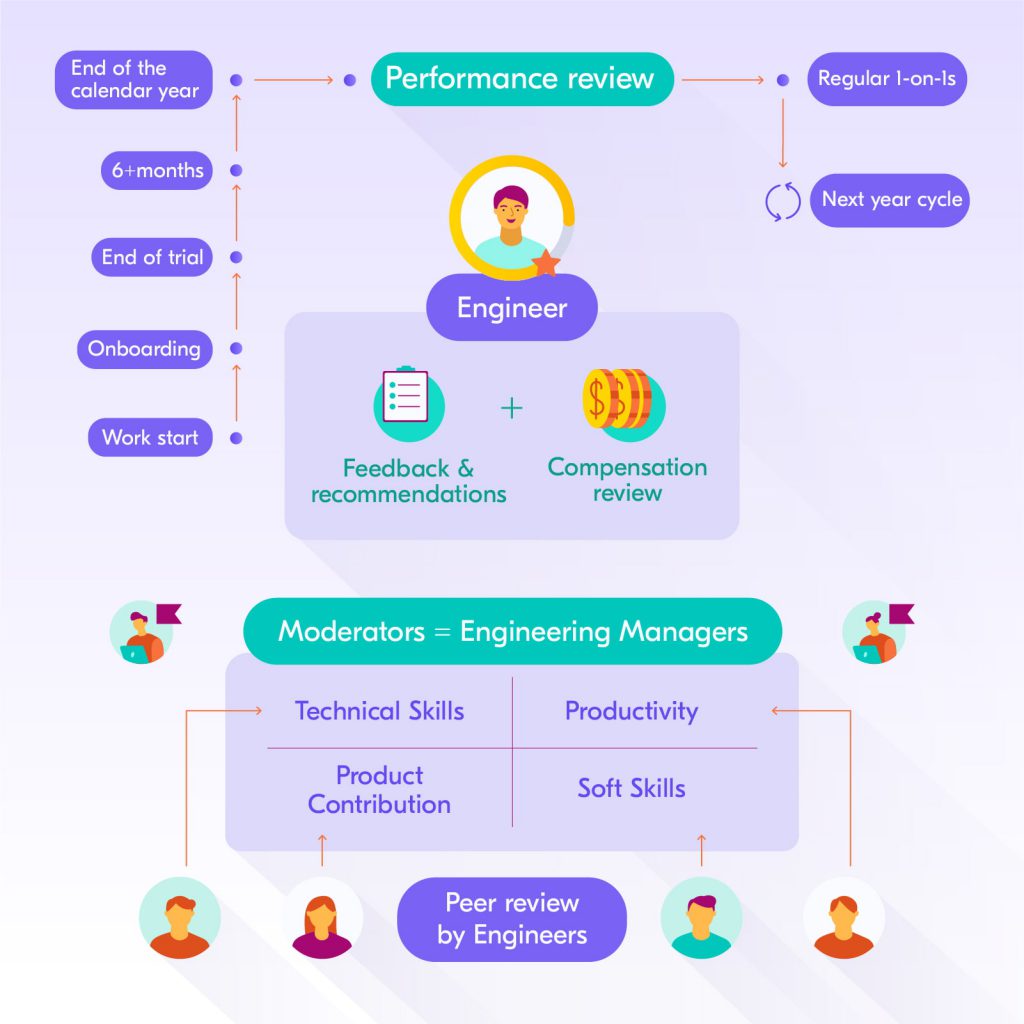

We believe that an engineering performance review conducted by a manager alone can become quite subjective. Without the whole picture and the feedback from other team members, a single person may be biased. That is why we decided to focus on the peer review model, where an engineer’s evaluation is based on the feedback from other engineers. The engineering management team acts as a moderator of the process.

We developed a matrix with the core skills, competencies, and areas of responsibility for the Product Engineering teams. These teams don’t have Project Managers and are independently responsible for the development process, planning, scoping, and product architecture.

According to this matrix, engineers assess the colleagues they’ve been working with during the past period. They define the level of each skill in accordance with the estimation scale.

Constructive feedback must be attached to each evaluation: what exceeded or fell below expectations, what could have been done better, and why. This way, all initiatives completed by the team are taken into account. For example, if an engineer proactively configured a CI pipeline, sped up the test suite, or sorted out all the bottlenecks of a planned feature and helped break it down into detailed tasks, all of this should be considered during the performance review.

Peer review metrics help to define an engineer’s position on the growth scale, relevant level of compensation, and what one should focus on for future development. The performance review results in compensation revision and regular feedback for each engineer. The level of compensation is defined according to the company’s growth and holistic feedback.

There are 3 things I like in the Railsware performance review process:

- It is regular. There is no reason to have awkward talks and ask for a salary review. All happens automatically.

- It is transparent. All the assessment categories are known and well described from the first day. I can focus on the interesting activities that help to boost my skills.

- It brings actionable feedback from my colleagues with good and to-improve points.

Olexander Paladiy

Product Manager

Skill Matrix

The Railsware Engineer Assessment System is a skill matrix. We had two goals in mind while developing it:

- Show the ways of professional development to the team

- Provide support in career growth and create a compensation enhancement model

Taking into consideration the needs of product engineering teams and business goals, we came up with four main skills to assess. Each has three categories.

1. Technical Skill. A basic engineering skill covering the breadth and depth of technical stack knowledge. This includes:

- Core engineering – understanding and usage of data structures, design patterns, refactoring, testing and TDD, and dealing with technical debt.

- Deep expertise – level of knowledge of main technologies, frameworks, and libraries, complexity and uniqueness of the solved tasks, experience in improving application performance.

- Broad knowledge – the ability to come up with alternative approaches and architectures. Knowledge of other programming languages, frameworks, and databases, along with the ability to propose and implement them in the proper way. An engineer should understand the pros and cons of each proposed technology and see how it fits the current project.

2. Productivity. This is about an engineer’s productivity while working on a product. Both individual and team impact are assessed here. Productivity is not about the number of lines of code written per hour. It is about the quality and stability of the implemented solutions and about following the established team code review practice:

- Speed of delivery – meeting planned deadlines or getting ahead of schedule. The skill of breaking tasks into smaller manageable parts is also assessed here.

- Stability of output – the solution stability, the number of changes made after review, as well as the ability to unblock oneself and others.

- Speed of learning and obtaining new context – time required to deal with a new stack or project. Another thing that counts here is helping others learn a new context: writing documentation and proactive work in pairs.

3. Product Contribution. Here we assess all tech leadership activities that go beyond the core skill of writing code. There is no Project Manager role in most of our teams. That’s why engineers work directly with a product owner to develop task requirements, do the planning, and decide on execution. Along with building the development process, engineers are responsible for the application architecture, mentoring, and onboarding.

Such a structure requires a good understanding of the product, users, and business priorities, as well as managing technical debt. The more responsibility and involvement in such activities an engineer has, the higher their Product Contribution level.

Read our Product Engineering article to know more about this approach.

4. Soft skills. Soft skills are often underestimated in engineering teams. They are mostly perceived as the ability to negotiate and find common ground with others. We have divided these skills into three equal categories:

- Proactiveness and leadership, decision-making, ownership over the task from requirements level to release, feedback on improving development processes, and so on.

- Communication skills and teamwork.

- General work approach, which we call Work Ethics. Independence, focus on results, reliability, and work transparency are assessed here. It is not related to communication skills but shows if an engineer can be entrusted to solve problems independently.
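The matrix described above can be pictured as a simple mapping from each skill to its categories. This is only a minimal sketch: the names `SKILL_MATRIX` and `categories_to_assess` are hypothetical, the category labels are taken from the lists above, and the Product Contribution categories are left empty because they are not named in this article.

```python
# Skill -> categories, using the labels given in the article.
SKILL_MATRIX: dict[str, tuple[str, ...]] = {
    "Technical Skill": (
        "Core engineering",
        "Deep expertise",
        "Broad knowledge",
    ),
    "Productivity": (
        "Speed of delivery",
        "Stability of output",
        "Speed of learning and obtaining new context",
    ),
    # Each skill has three categories, but the ones for Product
    # Contribution are not listed in the article, so none are assumed here.
    "Product Contribution": (),
    "Soft skills": (
        "Proactiveness and leadership",
        "Communication skills and teamwork",
        "Work Ethics",
    ),
}


def categories_to_assess(matrix: dict[str, tuple[str, ...]]) -> list[tuple[str, str]]:
    """Flatten the matrix into (skill, category) pairs a reviewer fills in."""
    return [
        (skill, category)
        for skill, categories in matrix.items()
        for category in categories
    ]
```

A reviewer would then provide one estimate, with feedback, for each pair returned by `categories_to_assess(SKILL_MATRIX)`.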

The skillset and the performance review method are described in detail to all company engineers, especially newcomers. From the first days, we reach out to all team members to explain how the scale works and to answer the following questions: what is essential for the product and business, and how to grow in the company.

Assessment and feedback biases

Feedback is fundamental to an objective review process. It has to be specific and detailed. Therefore, we have highlighted two mandatory sections of holistic feedback: Goods (what went well) and To-improves (things that could be improved), both accompanied by clear reasoning and possible alternative results.

Biases often arise when developing feedback or during the assessment. This can hinder objectivity. It can happen either because of a lack of information or an excessive concentration on recent events.

Common evaluation problems and how we deal with them

Time Bias

Performance reviews take place every 12 months. Skill levels and all results achieved during the past calendar year are taken into consideration.

However, feedback is sometimes shaped shortly before the performance review, when only the achievements of the last 1-2 months are still fresh in memory. Conversely, peer feedback may be based on events that happened long ago but stuck in memory: a great achievement from the distant past can keep affecting all skill and performance estimates in the future.

Over Strictness or Leniency

Some people can be too demanding of other team members and treat minor mistakes too critically. For example, forgetting to fix linter warnings before creating a pull request is not a reason to rate someone’s overall technical skill as low.

Others can miss critical things, such as a lack of tests or obvious performance problems, and overrate a teammate. It is important to understand which group of skills a contribution belongs to and avoid evaluating all skills simultaneously.

Averaging the team

Every time there is an “average” score on the scale, people are tempted to use it, just for a quick evaluation. It happens when a reviewer doesn’t have enough feedback and has no time or desire to collect it.

The problem is not the average estimate itself but the lack of reasoning and constructive feedback behind it. Such evaluations bring no value to the end-users of the performance review process – the engineering team. The average score must be supported by a list of goods/to-improves and explain clearly why this skill is not above or below average.

A detailed skill matrix and a clear understanding of the engineer’s role in the team help to avoid averaging estimations.
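The rule that every score, including an average one, needs supporting feedback can be sketched as a small check. This is an illustration only: the `Evaluation` record, its field names, and the numeric `level` are assumptions made for the example, as is the choice to require both sections to be non-empty.

```python
from dataclasses import dataclass, field


@dataclass
class Evaluation:
    """One reviewer's estimate of one skill category (hypothetical shape)."""

    reviewer: str
    category: str
    level: int  # position on an assumed numeric scale
    goods: list[str] = field(default_factory=list)
    to_improves: list[str] = field(default_factory=list)


def missing_feedback(evaluation: Evaluation) -> list[str]:
    """Return the names of the mandatory feedback sections that are empty."""
    missing = []
    # Whitespace-only entries do not count as real feedback.
    if not any(item.strip() for item in evaluation.goods):
        missing.append("goods")
    if not any(item.strip() for item in evaluation.to_improves):
        missing.append("to_improves")
    return missing


# A quick "average" score with no arguments is flagged for follow-up.
unsupported = Evaluation("reviewer_a", "Core engineering", level=3)
print(missing_feedback(unsupported))  # ['goods', 'to_improves']
```

A moderator could use a check like this to send an evaluation back for more detail before it enters the group discussion.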

In our performance review process, I like the elimination of biases the most. This is achieved by working in review groups and doing the planning poker.

You might have a strong (and sometimes, too emotional) opinion on some features of the engineer you evaluate. But your review groupmates usually make it more impartial and weighted.

Viktor Zahorodnii

Full Stack Engineer

How we avoid evaluation biases

The engineering management team is responsible for creating the scale of skills and developing the performance review process itself. This team also acts as a moderator during the peer review, helping to avoid biases.

The review is performed in several groups of 4-5 engineers each. Each participant estimates the skill categories for the team members under review and supports every evaluation with feedback. After this, each participant’s final assessment is revealed using the planning poker method, similar to how the team estimates tasks during a sprint.

This way all participants of the review process moderate each other, minimizing biases and improving the overall understanding of the skill matrix. As a result, a group review becomes more objective.
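One round of such a planning poker review might look like the sketch below. It is not Railsware’s actual tooling: the 1-5 scale, the `MAX_SPREAD` threshold, and the rule of settling on the lower median are all assumptions chosen for illustration.

```python
from statistics import median_low
from typing import Optional

# Assumed 1-5 scale; the article does not specify the real one.
SCALE = range(1, 6)
# Assumed threshold: estimates at most one level apart count as consensus.
MAX_SPREAD = 1


def poker_round(estimates: dict[str, int]) -> tuple[bool, Optional[int]]:
    """Reveal all estimates at once and check whether the group agrees.

    Returns (True, level) when the spread is within MAX_SPREAD. Otherwise
    returns (False, None), meaning the group should discuss the feedback
    behind the outlying estimates and vote again.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    for reviewer, level in estimates.items():
        if level not in SCALE:
            raise ValueError(f"{reviewer}: {level} is outside the scale")

    levels = sorted(estimates.values())
    if levels[-1] - levels[0] > MAX_SPREAD:
        return False, None
    # median_low always returns one of the submitted values,
    # so the agreed level stays on the scale.
    return True, median_low(levels)
```

For instance, estimates of 3, 3, and 4 would settle on 3, while estimates of 2, 4, and 4 would send the group back to discuss why one reviewer saw the skill so differently.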

Additional questions help to clarify the details of some cases, and feedback from team members gives an overview from different perspectives. Each skill of the matrix has its own list of questions that help to assess it. For example, if a team member suggested refactoring the main part of the codebase, one should understand how it was explained to the product owner, how it was planned, and who was in charge of the task execution.

It is important to describe each case in detail to define the skill level according to the scale.

Recap

The performance review method at Railsware helps to define an engineer’s engagement in projects, direct responsibilities, and company life: onboarding for newcomers, participation in side projects, and team development. All this affects the compensation level, and every engineer sees how compensation directly depends on the scope of work.

Of course, there will always be a bit of subjectivity. However, we believe that this can be managed. Our model is based on data and cross-functional feedback, which makes the whole review process transparent.

We believe this model helps to motivate Railswarians. On average, people stay with the company for 5 years, and this is the best proof.